Date: 18th March, 2024

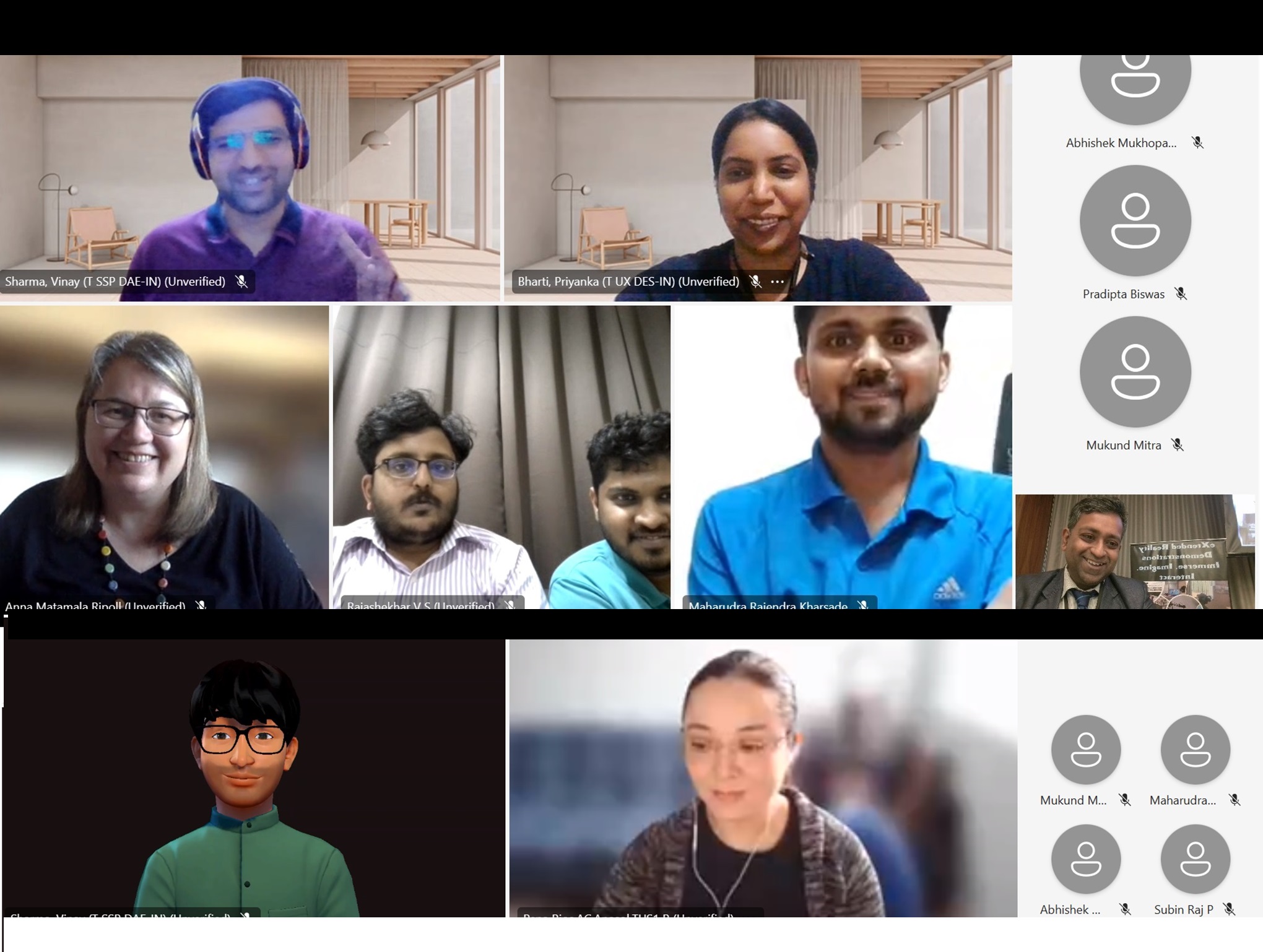

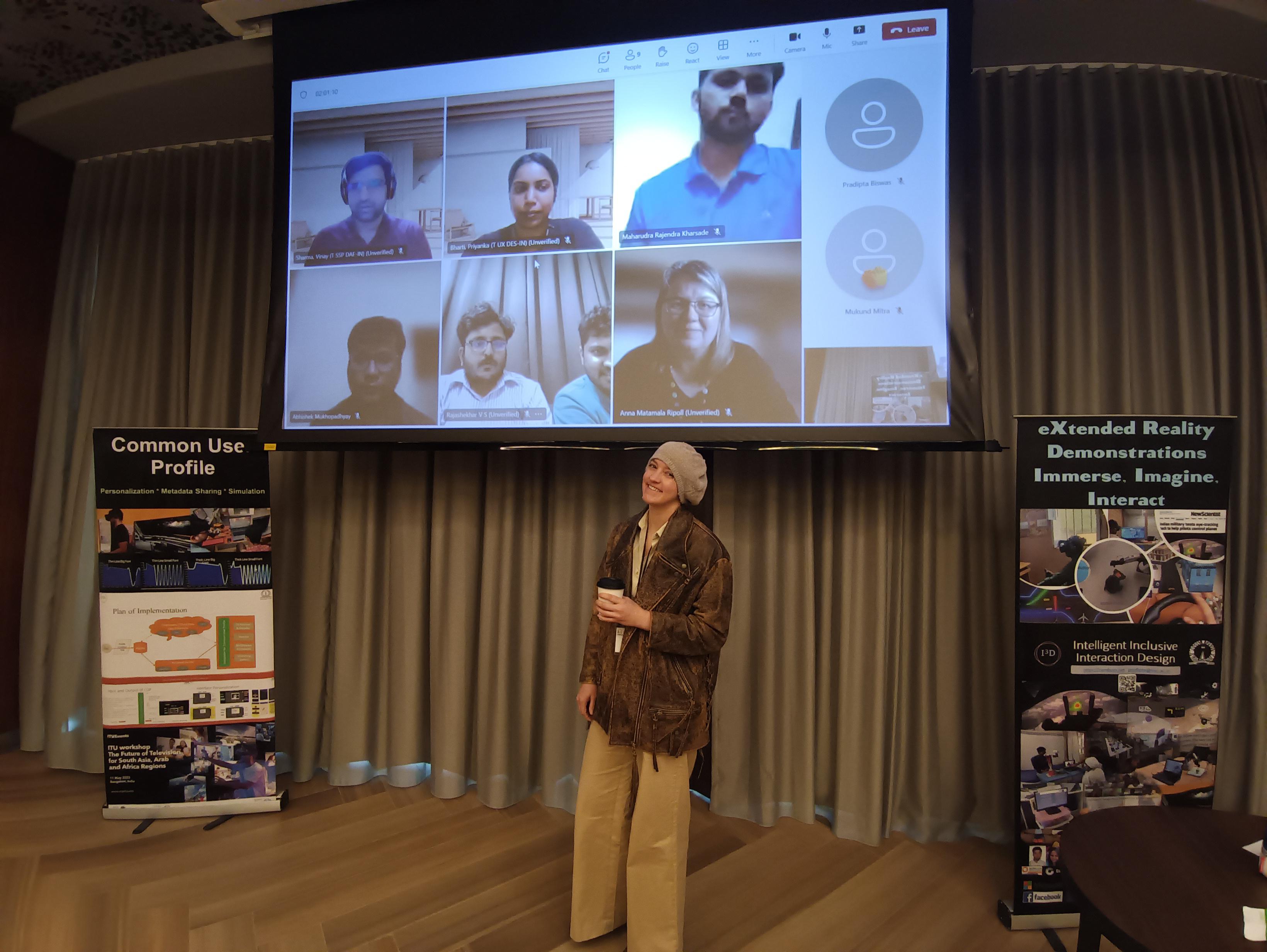

Link for Online Participants

Objectives

- Investigating UI/UX challenges in the Metaverse

- Role of AI in improving user experience and the scope of generative AI in the Metaverse

- Scope and challenges for new modalities such as gesture and eye tracking in the Metaverse

- Challenges in improving the accessibility of the Metaverse for people with a different range of abilities

- Identifying gaps in standardization for the Metaverse

Call for Papers


What is a metaverse? While the etymology of the constituent words, meta and universe, does not provide a clear definition, the term metaverse is often explained as an interactive virtual space. The term first appeared in the 1992 science fiction novel Snow Crash, and in the early 2000s the gaming industry explored a similar concept of virtual worlds populated by digital avatars. Major companies have used various terms, such as Metaverse (Meta/Facebook), Mesh (Microsoft), and Nth Floor (Accenture), to invest in and commercialize immersive media products. As an illustration of the industrial Metaverse, Siemens is developing its own smart city, Siemensstadt Square, between now and 2035. Traditionally, immersive media is described through a continuum between reality and virtual reality, with intermediate systems known as Augmented and Mixed Reality systems. The International Organization for Standardization (ISO) defines a mixed and augmented reality system as a system that uses a mixture of representations of physical world data and virtual world data as its presentation medium. This workshop will explore challenges and opportunities related to user interface and user interaction experience (UI/UX) in the Metaverse and investigate the use of Artificial Intelligence (AI), including generative AI, for improving UI/UX in the Metaverse.

Papers accepted at this workshop will be published in IUI 24 workshop proceedings, published via CEUR-WS. These proceedings will be edited by workshop chairs.

Paper template (single column): Please click here

Regular papers need to be at least ten pages long (single column) to be published in CEUR-WS proceedings, and short papers need to have at least five pages (see https://ceur-ws.org/HOWTOSUBMIT.html).

Please click here to submit paper

Submission Deadline: Jan 16, 2024

Notification of Acceptance: Feb 9, 2024

Camera-Ready Papers Due: Mar 1, 2024

Although the Metaverse is a newly popular concept, researchers have long explored interaction design for eXtended Reality (XR) systems. AI in the form of object recognition in Augmented Reality systems or speech recognition in Virtual Reality systems is in widespread use but is not explicitly considered an example of an Intelligent User Interface. However, AI technology has the potential to introduce new interaction modalities such as eye and hand tracking for the Metaverse, and to improve the interaction experience through emotion recognition, cognitive load estimation, target prediction, or new accessibility features in 3D media. Recent advances in generative AI have been used to generate synthetic data that improves object recognition accuracy in mixed reality systems, and Google's RT-2 explored using a large language model to automatically generate instructions for robot manipulation, an approach that can be extended to the industrial metaverse. The organizers of this workshop bring a diverse range of expertise, from developing a VR crew cabin for India's maiden human space flight mission and developing subtitles for VR media to designing industrial metaverse systems and marketing new headsets for data collection in the Metaverse. In addition, the team is actively involved in standardization activities through ISO and ITU, and the workshop will directly contribute to assembling future use cases and associated challenges in the Metaverse.

Workshop Schedule

Afternoon Session

- EDT 1400 / CET 1900 / GMT 1800, Paper Presentations

Towards an accessible metaverse: user requirements, Anna Matamala (Universitat Autonoma de Barcelona)*; Estella Oncins (Universitat Autonoma de Barcelona)

The Metaverse and the Future of Education, Anasol Pena-Rios (British Telecom, UK)

Learning Human-to-Robot Handovers from Demonstrations, Mukund Mitra (Indian Institute of Science, Bengaluru)*; Partha P Chakrabarti (Indian Institute of Technology Kharagpur); Pradipta Biswas (Indian Institute of Science)

- EDT 1500, Aria Research Kit Demo

Project Aria glasses utilize groundbreaking technology to help researchers gather information from the user's perspective, contributing to the advancement of egocentric research in machine perception and augmented reality. The sensors on the Project Aria glasses capture the wearer's video and audio, as well as their eye tracking and location information. Example use cases include:

Wayfinding for indoor and outdoor spaces (see CMU Case Study)

Understanding daily life from a first-person POV (learn more about Ego4D)

Researching AR in-motion and in-vehicle use cases (see BMW Case Study)

AI assistant with egocentric context from physical life

- EDT 1700, Closing Remarks - Pradipta Biswas, IISc

Organizers:

Prof Pradipta Biswas*, Indian Institute of Science, pradipta@iisc.ac.in

Dr Vinay Krishna Sharma, Siemens, sharma.vinay@siemens.com

Prof Pilar Orero, Universitat Autonoma De Barcelona, pilar.orero@uab.cat

Dr Eryn Whitworth, Meta, eryn@meta.com

Dr Anasol Pena-Rios, British Telecom, anasol.penarios@bt.com

Publications

- S. Saren, A. Mukhopadhyay, D. Ghose, P. Biswas, Comparing alternative modalities in the context of multimodal human-robot interaction, Journal on Multimodal User Interfaces 2023, Springer

- S. Raj, LRD Murthy, T Adhithiyan, A. Chakrabarti, P. Biswas, Mixed Reality and Deep Learning based System for Assisting Assembly Process, Journal on Multimodal User Interfaces 2023, Springer

- AMC Rao, S. Raj, AK Shah, Harshitha BR, NR Talwar, VK Sharma, Sanjana M, H Vishvakarma, Development and comparison studies of XR interfaces for path definition in remote welding scenarios, Multimedia Tools and Applications 2023, Springer

- A Hebbar, AK Shah, S Vinod, A Pashilkar, P Biswas (2023). Cognitive load estimation in VR flight simulator. Journal of Eye Movement Research, 15(3)

- M Mitra, P Pati, VK Sharma, S Raj, PP Chakrabarti, P Biswas, Comparison of Target Prediction in VR and MR using Inverse Reinforcement Learning, ACM International Conference on Intelligent User Interfaces (IUI 23)

- A Mukhopadhyay, VK Sharma, PG Tatyarao, AK Shah, AMC Rao, PR Subin, P Biswas, A comparison study between XR interfaces for driver assistance in take over request, Transportation Engineering (11), 2023, 100159, Elsevier, ISSN 2666-691X, https://doi.org/10.1016/j.treng.2022.100159

- S Arjun, A Hebbar, S Vinod and P Biswas, VR Cognitive Load Dashboard for Flight Simulator, ACM Symposium on Eye Tracking Research and Applications (ETRA) 2022

- A. Mukhopadhyay, GS Rajshekar Reddy, S. Ghosh, P. Biswas, Validating Social Distancing through Deep Learning and VR-Based Digital Twins, ACM VRST 2021

- A. Mukhopadhyay, GS Rajshekar Reddy, KPS Saluja, S. Ghosh, A. Peña-Rios, G. K. Gopal, P. Biswas, A Virtual Reality-Based Digital Twin of Office Spaces with Social Distance Measurement Feature, Virtual Reality & Intelligent Hardware, Elsevier

- S. Arjun, GS Rajshekar, A. Mukhopadhyay, S. Vinod and P. Biswas, Evaluating Visual Variables in a Virtual Reality Environment, British HCI 2021

- A. Mukhopadhyay, GS Rajshekar Reddy, I. Mukherjee, G. K. Gopal, A. Peña-Rios, P. Biswas, Generating Synthetic Data for Deep Learning using VR Digital Twin, 3rd International Conference on Virtual Reality and Image Processing (VRIP 2021)

ACM International Conference on Intelligent User Interfaces 2024
