Automotive UI for Next Generation Car Cockpit

Interaction Design and Distraction Detection of Drivers in Automotive

Gowdham Prabhakar

Supervisor: Dr. Pradipta Biswas

I3D Lab, CPDM, IISc Bangalore

Abstract: As driver distraction leads to car crashes and fatal accidents, the research community has investigated ways to detect and reduce such distraction. Operating secondary tasks while driving is one of the key reasons for driver distraction. Detecting inattention blindness of drivers is more challenging than detecting instances of eyes-off-road. Recent studies have found that high perceptual load results in increased inattention blindness. This dissertation investigates methods to reduce driver distraction caused by operating secondary tasks. It proposes new interactive technologies involving virtual touch and eye gaze tracking to undertake secondary tasks in both head-down and head-up displays. It also proposes a new machine learning model to estimate cognitive load from ocular parameters and validates it against EEG parameters in studies involving professional drivers operating real vehicles. Finally, the grounded theory method of qualitative research was used to understand and explore the concerns and issues of professional drivers, resolve them, and identify factors contributing to acceptance of the proposed interaction technologies. The structure of the thesis is briefly described below.

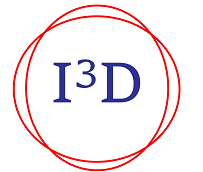

Figure 1. Ford Model T Dashboard Design 1909

In 1886, two German engineers, Benz and Daimler, laid the foundation of what later became Mercedes-Benz. Daimler worked on the internal combustion (IC) engine and initially tested it on a bicycle, while Benz designed a vehicle that was patented in 1886. Daimler’s vehicle appeared a few months later. Around the same time in North America, George B. Selden patented his automobile engine and its application in a car in 1895, while Henry Ford introduced the iconic Model T (Figure 1) in 1908. In 1926, two-thirds of the cars on the road were Model Ts. Although the first automobile was patented in the late 19th century, it was not until 1924 that Kelly’s Motors installed a car radio, which can be considered the first secondary task in addition to driving. In 1939, Packard introduced the first air-conditioned car. In the 1960s, tape music players were installed in cars, while in the 1980s the CD (Compact Disc) player was introduced. In the 2000s, MP3 players became common in cars. In 2011, Pioneer introduced a smartphone-based head unit with iOS support. In 2012, Tesla introduced a full-size single digital display for secondary tasks.

Early cars like the Model T had minimal controls to operate the vehicle. The user interface in cars became more complex with the introduction of music players and air conditioners, as in the Packard. The digital interfaces in Tesla cars allowed users to personalize interaction in terms of the hierarchy of icons displayed. However, a single large display may increase the visual search time for the appropriate screen element and in turn increase the duration of eyes-off-road. This increase in the complexity of car dashboards required researchers to improve the UI (User Interface) and decrease driver distraction while operating it. This dissertation hypothesized that undertaking secondary tasks using eye gaze and virtual touch systems can improve driving performance and, in parallel, can be used to accurately estimate drivers’ distraction and cognitive load. The literature review discussed the history of automotive HMIs (Human Machine Interfaces) and elaborated on the challenges in current HMIs (Figure 2). It surveyed existing input modalities for interactive devices, such as touchscreen, speech, gesture recognition, and eye gaze tracking systems, that are already deployed or tested in commercial vehicles.

Figure 2. Existing input modalities in automotive

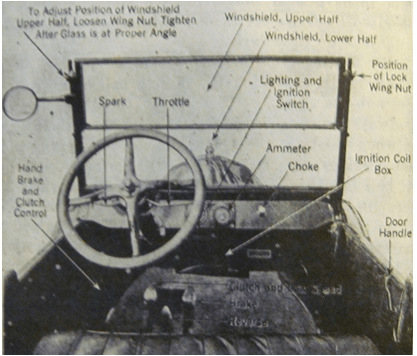

Figure 3. Wearable laser-controlled HDD

Consumer electronics like televisions and computer monitors have already explored modalities like the remote controller, mouse, keyboard, and touch. The touch interface has been widely used because of its ease of access, but it is challenging to use the touch modality to operate a display at a distance. Virtual touch technology helps achieve touch operation at a distance from the display. I explored different modalities for operating a Graphical User Interface (GUI) through virtual touch. I proposed two new interaction devices, a laser pointer with a hardware switch and a laser pointer with an eye gaze switch (Figure 3), which do not require drivers to physically touch a display. I compared their performance with a touchscreen in an automotive environment by measuring the pointing and selection time for a secondary task, deviation from the lane, average speed, variation in steering angle, cognitive load, and system usability. Results showed that the laser tracker system with eye gaze switch did not significantly degrade driving or pointing performance compared to the touchscreen in the standard ISO 26022 lane change task.
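The driving performance measures listed above can be summarized per trial roughly as follows. This is a minimal sketch, not the analysis code used in the dissertation: the function name, the argument names, the units, and the assumption of equally sampled logs are all illustrative.

```python
from statistics import mean, pstdev


def driving_metrics(lateral_pos_m, lane_center_m, speed_kmh, steering_deg):
    """Summarize one driving trial from equally sampled logs.

    All argument names and units are hypothetical; they only illustrate
    the three driving measures named in the text.
    """
    # Absolute distance of the car from the lane centre at each sample.
    deviations = [abs(p - c) for p, c in zip(lateral_pos_m, lane_center_m)]
    return {
        "mean_lane_deviation_m": mean(deviations),
        "average_speed_kmh": mean(speed_kmh),
        # Population standard deviation as a simple measure of steering variation.
        "steering_angle_sd_deg": pstdev(steering_deg),
    }


# Made-up sample values, for illustration only.
trial = driving_metrics(
    lateral_pos_m=[0.0, 0.2, 0.4, 0.2],
    lane_center_m=[0.0, 0.0, 0.0, 0.0],
    speed_kmh=[50.0, 52.0, 54.0, 52.0],
    steering_deg=[0.0, 2.0, 0.0, -2.0],
)
print(trial)
```

Computing the same summary for each input modality would allow a like-for-like comparison between the touchscreen and the laser-based devices.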

Though virtual touch is useful for existing head-down displays (HDDs), it still requires drivers to take their eyes off the road for interaction. I investigated a see-through Head-Up Display (HUD) and explored hands-free interaction techniques in the form of an eye gaze-controlled interface. The gaze-controlled interface allows the user to look at the intended location on the screen to select the intended target. The proposed interactive HUD system (Figure 4) can be deployed on the windshield of a vehicle and does not occlude road vision. The gaze-controlled HUD system was tested in both a driving simulator and actual vehicles. Drivers could operate the display as fast as an existing touchscreen, with improved driving performance in comparison to the existing touchscreen-based HDD. In addition to developing new technologies to facilitate automotive user interaction, I also proposed a new technology to estimate the cognitive load of drivers in real time.
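One common way to turn "looking at a target" into a selection is a dwell-time rule, sketched below. The text does not state which selection mechanism the HUD uses, so the dwell rule, the function name `select_by_dwell`, the pixel radius, and the 500 ms dwell time are all assumptions made for illustration.

```python
from math import hypot


def select_by_dwell(gaze_samples, targets, radius_px=60.0, dwell_ms=500):
    """Return the first target the gaze rests on for at least dwell_ms.

    gaze_samples: list of (timestamp_ms, x, y) tuples in screen pixels.
    targets: dict mapping a target name to its (x, y) centre.
    The dwell rule and all parameter values are hypothetical.
    """
    current, since = None, None
    for t, x, y in gaze_samples:
        # Find the first target whose centre is within radius_px of the gaze point.
        hit = None
        for name, (cx, cy) in targets.items():
            if hypot(x - cx, y - cy) <= radius_px:
                hit = name
                break
        if hit != current:
            # Gaze moved to a different target (or off all targets): restart the timer.
            current, since = hit, t
        elif hit is not None and t - since >= dwell_ms:
            return hit
    return None


# Made-up icon positions and gaze samples, for illustration only.
icons = {"music": (100, 100), "navigation": (300, 100)}
samples = [(0, 290, 105), (100, 110, 95), (300, 105, 100), (600, 98, 102)]
print(select_by_dwell(samples, icons))
```

A longer dwell time reduces accidental selections at the cost of slower interaction, which is why such a parameter would normally be tuned in user studies.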

Figure 4. Gaze-controlled HUD

Figure 5. Cognitive Load Monitoring Dashboard

I investigated distraction detection of drivers by estimating their cognitive load while operating secondary tasks. My studies analyzed different physiological parameters involving pupil dilation, rate of fixation, saccadic intrusion, head movement, and EEG (Electroencephalogram). Initially, a series of studies were conducted in the laboratory involving standard psychometric tests like N-back and arithmetic tests, followed by trials in a driving simulator. I also undertook studies involving professional drivers performing different secondary tasks while driving an actual car to validate the performance of the proposed system. Because it was difficult to manually find a single threshold that classifies cognitive states for all drivers, I used machine learning methods trained on data from multiple drivers to learn a global threshold. An intelligent system generating visual, auditory, and haptic alerts was developed and integrated with the proposed distraction detection system. I also developed a cognitive load monitoring dashboard (Figure 5) for comparing automotive HMIs with real-time graphical feedback on ocular parameters and cognitive load.
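The idea of learning one global threshold from pooled data of several drivers can be illustrated with a simple exhaustive search. This is a minimal sketch and not the machine learning model proposed in the dissertation: the single scalar feature, the labels, the function name `learn_global_threshold`, and the sample values are all assumptions.

```python
def learn_global_threshold(samples):
    """Pick the threshold that best separates low from high cognitive load.

    samples: list of (feature_value, label) pooled over all drivers,
    where label 1 means high load and 0 means low load. The feature could
    be any scalar ocular measure; this is a hypothetical illustration.
    """
    values = sorted({v for v, _ in samples})
    # Candidate thresholds are midpoints between consecutive distinct values.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]
    best_threshold, best_accuracy = None, -1.0
    for t in candidates:
        # Predict high load when the feature exceeds the threshold.
        correct = sum((v > t) == bool(label) for v, label in samples)
        accuracy = correct / len(samples)
        if accuracy > best_accuracy:
            best_threshold, best_accuracy = t, accuracy
    return best_threshold, best_accuracy


# Made-up feature values pooled from two hypothetical drivers.
driver_a = [(2, 0), (4, 0), (10, 1), (12, 1)]
driver_b = [(6, 0), (14, 1)]
threshold, accuracy = learn_global_threshold(driver_a + driver_b)
print(threshold, accuracy)
```

In practice the pooled data would rarely be perfectly separable, and a classifier over several ocular parameters would replace the single-feature search shown here.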

Even when the efficacy of new technologies has been analyzed quantitatively in real time, they will be preferred and adopted by industry only if they are accepted by the consumers (drivers). A qualitative study on the wearable laser-controller-based HDD and the eye gaze-controlled HUD explored the concerns of professional drivers in India and how these concerns could be addressed. Drivers reported higher response times and malfunctioning of touchscreens at crucial times, such as while picking up passengers, and said that touchscreens did not meet psychological needs such as the desire for control and positive moods. It was found that the wearable laser-controller-based HDD contributed to lowering technological anxiety by enhancing the notion of safety. Familiarity and learnability facilitate ease of use for the eye gaze-controlled HUD. The ability of participants to move freely in the seat while using an interaction system influences their perceived risk of accidents, which could be further reduced by accurate and quick selection of icons. Our study also gave insights into the purchasing power of professional drivers and the factors influencing their buying motives. All these findings helped to form a theory of acceptance and to further improve the wearable laser-controlled HDD and the gaze-controlled HUD.

Figure 6. User acceptance of gaze-controlled HUD

Figure 7. Gaze-controlled Interaction in Aviation

Finally, I summarized the dissertation and discussed potential future directions. I discussed the application of my research outcomes in domains other than automotive, such as large screen interaction, aviation (Figure 7), and smart manufacturing. The cognitive load estimation system was used to estimate the workload of pilots in both simulation and in-flight studies, while the laser tracker-based virtual touch system was integrated into an interactive sensor dashboard of a smart manufacturing system. The laser tracker system was also used for operating large screen displays, with a click and drag feature used for on-screen drawing. As part of this research, I set up a flexible automotive UI testbed involving a driving simulator, eye gaze and EEG trackers, and associated software that can be used to compare and test the performance of automotive HMIs in a plug-and-play fashion. I concluded with my proposals for future post-doctoral work.

Publications

- Prabhakar, G., Mukhopadhyay, A., Murthy, L., Madan, M., & Biswas, P. (2020). Cognitive load estimation using Ocular Parameters in Automotive. Transportation Engineering, 100008. [Journal] [Link]

- Prabhakar, G., Ramakrishnan, A., Murthy, L. R. D., Sharma, V. K., Madan, M., Deshmukh, S. and Biswas, P. Interactive Gaze & Finger controlled HUD for Cars, Journal on Multimodal User Interfaces, Springer, 2019 [Journal] [Link]

- Prabhakar, G. and Biswas, P. (2018). Eye Gaze Controlled Projected Display in Automotive and Military Aviation Environments. Multimodal Technologies and Interaction, 2(1) [Journal] [Link]

- Prabhakar, G., Madhu, N. & Biswas, P. (2018). Comparing Pupil Dilation, Head Movement, and EEG for Distraction Detection of Drivers, Proceedings of the 32nd British Human Computer Interaction Conference 2018 (British HCI 18) [Link]

- Prabhakar, G., & Biswas, P. (2017, July). Evaluation of laser pointer as a pointing device in automotive. In Intelligent Computing, Instrumentation and Control Technologies (ICICICT), 2017 International Conference on (pp. 364-371). IEEE. [Best Paper Award] [Link]

- Prabhakar, G., Rajesh, J., & Biswas, P. (2016, December). Comparison of three hand movement tracking sensors as cursor controllers. In Control, Instrumentation, Communication and Computational Technologies (ICCICCT), 2016 International Conference on (pp. 358-364). IEEE. [Best Paper Award] [Link]

- Prabhakar, G. and Biswas, P. (2017, September). Interaction Design and Distraction Detection in Automotive UI, ACM Automotive UI Doctoral Consortium (ACM Automotive UI 2017) [ACM Travel Award] [Link]

- Biswas, P., & Prabhakar, G. (2018). Detecting drivers cognitive load from saccadic intrusion. Transportation research part F: traffic psychology and behaviour, 54, 63-78. [Journal] [Link]

- Babu, M. D., JeevithaShree, D. V., Prabhakar, G., Saluja, K. P. S., Pashilkar, A., & Biswas, P. (2019). Estimating Pilots Cognitive Load From Ocular Parameters Through Simulation and In-Flight Studies. Journal of Eye Movement Research, 12(3), 3. [Journal] [Link]

- Biswas, P., Prabhakar, G., Rajesh, J., Pandit, K., & Halder, A. (2017, July). Improving eye gaze controlled car dashboard using simulated annealing. In Proceedings of the 31st British Computer Society Human Computer Interaction Conference (p. 39). [Link]

- Aprana, R., Modiksha, M., Prabhakar, G., Deshmukh, S., & Biswas, P. (2019, October). Eye Gaze Controlled Head-up Display. In International Conference on ICT for Sustainable Development 2019 [Link]

- Biswas, P., Roy, S., Prabhakar, G., Rajesh, J., Arjun, S., Arora, M., & Chakrabarti, A. (2017, July). Interactive sensor visualization for smart manufacturing system. In Proceedings of the 31st British Computer Society Human Computer Interaction Conference (p. 99). [Link]

- Babu, M. D., Biswas, P., Prabhakar, G., JeevithaShree, D. V., and Murthy, L. R. D. Eye Gaze Tracking in Military Aviation, Indian Air Force AVIAMAT 2018 [Link]

- Babu, M. D., JeevithaShree, D. V., Prabhakar, G., and Biswas, P. Using Eye Gaze Tracker to Automatically Estimate Pilots Cognitive Load, 50th International Symposium of the Society of Flight Test Engineers (SFTE) [Link]

- Biswas, P., Saluja, K. S., Arjun, S., Murthy, L. R. D., Prabhakar, G., Sharma, V. K., & DV, J. S. (2020). COVID 19 Data Visualization through Automatic Phase Detection. [Link]

- Prabhakar, G., and Biswas, P. Wearable Laser Pointer for Automotive User Interface, Application No.: 201741035044, PCT International Application No. PCT/IB2018/057680 [Link]

- Prabhakar, G., Biswas, P., Deshmukh, S., Modiksha, M., and Mukhopadhyay, A., System and Method for Monitoring Cognitive Load of a Driver of a Vehicle, Indian Patent Application No.: 201941052358

- Biswas, P., Deshmukh, S., Prabhakar, G., Modiksha, M., Sharma, V. K., and Ramakrishnan, A., A System for Man-Machine Interaction in Vehicles, Indian Patent Application No.: 201941009219